Past Colloquia

See past presentation Abstracts.

Spring 2024

- January 30

Dr. Richard Ressler

Teaching Responsible Data Science — A Conversation

Abstract: The AU Data Science Programs added a Learning Outcome for "Responsible Data Science" in December 2021. This talk facilitates a conversation among attendees about how best to help students achieve competency in this learning outcome. It starts with examples from current courses to discuss how we define and teach elements of responsible data science today. It then transitions to conversations with the attendees about what could be done to improve the content and methods of instruction. The final portion expands the conversation to the idea of a department-wide strategy for infusing elements of ethical reasoning into more courses including those that support students based in other departments. Responsible data science integrates diverse perspectives so students, faculty, and staff from across AU are welcome to join the conversation.

Fall 2023

- December 6, 3:00, DMTI 117:

Yao Yao, PhD candidate, University of Iowa

Candidate for the AU Assistant Professor position

Optimization for Fairness-aware Machine Learning

Abstract: Artificial intelligence (AI) and machine learning technologies have been used in high-stakes decision-making systems, such as lending decisions, criminal justice sentencing, and resource allocation. A new challenge arising with these AI systems is how to avoid the unfairness introduced by the systems that leads to discriminatory decisions for protected groups defined by some sensitive variables (e.g., age, race, gender). I propose new threshold-agnostic fairness metrics and statistical distance-based fairness metrics, which are stronger than many existing fairness metrics in the literature. Among the techniques for improving the fairness of AI systems, the optimization-based method, which trains a model by optimizing its prediction performance subject to fairness constraints, is the most promising because of its intuitive idea and the Pareto efficiency it guarantees when trading off prediction performance against fairness. I develop new stochastic-gradient-based optimization algorithms that leverage the unique structure of the model to expedite the training process with theoretical guarantees. I also numerically demonstrate the effectiveness of my approaches on real-world data under different fairness metrics.

- November 30, 3:00, DMTI 117:

Nathan Wycoff, Data Science Fellow, Massive Data Institute, Georgetown

Candidate for the AU Assistant Professor position

Wresting Interpretability from Complexity with Variable and Dimension Reduction

Abstract: Building interpretable models from data is a core aim of science. But in the pursuit of prediction accuracy, modern techniques in machine learning and computational statistics have become increasingly complex and difficult to interpret. In this talk, we will discuss one strategy for developing simpler models, that of performing dimension reduction, especially through variable selection. We will begin with a general overview of the role of variable selection in science before diving into a new algorithm which can impose quite general sparsity structures as part of any analysis performed via gradient-based optimization. This involves developing a proximal operator, a convex-analytic object which allows us to bring gradient-based methods to bear on certain non-differentiable problems. We then discuss applications of this methodology to problems in vaccination hesitancy and global migration. Finally, we'll pivot to linear dimension reduction, which involves considering not individual input variables, but combinations of them. In particular, we'll develop a technique for gradient-based dimension reduction with Gaussian processes and study its behavior on discontinuous functions, such as Agent-Based Models.

- November 28

Dr. Stefaan De Winter, Program Director, National Science Foundation

Projective Two-Weight Sets

Abstract: Occasionally it happens in math that different research areas turn out to be equivalent, usually increasing interest in the topic from both perspectives. A case of this is displayed in Finite Geometry and Coding Theory through the equivalence of what are now called projective two-weight sets and linear two-weight codes (both of these turn out to also be equivalent to a certain type of Cayley graph). These equivalences were described in great detail in the mid-80s, and the topic remains popular to this day among finite geometers, coding theorists, and graph theorists alike. In this talk I will discuss these equivalences, explain one of the key open problems (from a geometric perspective), and end with a recent contribution of my own. Along the way it will become clear how the fact that different research communities use different terminology for the same object can lead to important results being missed by others. The talk will be aimed at a general math/stat audience and will be self-contained.

- October 31

Dr. Melinda Kleczynski, National Institute of Standards and Technology

Topological Data Analysis Of Coordinate-Based And Interaction-Based Datasets

Abstract: A key step for many topological data analysis techniques is to generate a simplicial complex or sequence of simplicial complexes. A common use case is to begin with a set of points, each described by a set of coordinates. Topological analysis of this type of dataset can yield important insights and quantification of structural features. Other types of datasets are also well-suited for these techniques. For example, any system which can be described as a bipartite graph also has a natural representation as a simplicial complex. We will discuss two applications, one involving coordinate-based data and the other involving coordinate-free data. The talk will be suitable for participants without prior experience with topological data analysis.

- October 24

Monica Jackson and William Howell, Math/Stat, AU

Maria De Jesus, SIS, AU

Kimberly Sellers, Department of Statistics, North Carolina State University

Examining the Role of Quality of Institutionalized Healthcare on Maternal Mortality in the Dominican Republic

Abstract: In this talk, we determine the extent to which the quality of institutionalized healthcare, sociodemographic factors of obstetric patients, and institutional factors affect maternal mortality in the Dominican Republic. We utilize the COM-Poisson distribution (Sellers et al., 2019) and the Pearson correlation coefficient to determine the relationship of predictor factors (i.e., hospital bed rate, vaginal birth rate, teenage mother birth rate, single mother birth rate, unemployment rate, infant mortality rate, and sex of child rate) in influencing maternal mortality rate. The factors hospital bed rate, teenage mother birth rate, and unemployment rate were not correlated with maternal mortality. Maternal mortality increased as vaginal birth rates and infant death rates increased, whereas it decreased as single mother birth rates increased. Further research to explore alternate response variables, such as maternal near-misses or severe maternal morbidity, is warranted. Additionally, the link found between infant death and maternal mortality presents an opportunity for collaboration among medical specialists to develop multi-faceted solutions to combat adverse maternal and infant health outcomes in the DR.

- October 3

Dr. Natalie Jackson, Vice President, GQR

How To Be An Informed Poll Consumer

Abstract: Political polls are everywhere, even when elections are a long time away, and they seem to get more prominence than they probably deserve given past struggles with accuracy. In order to be an informed consumer in this corner of political media, you have to know a little statistics. In this talk, Natalie will discuss the statistical underpinnings of polling methodology, why those have fallen apart in recent years, and what pollsters are doing differently now. After this talk, you will be prepared to think critically about polls and politics for the upcoming 2024 election.

- September 26

Dr. Raza Ul Mustafa, American University

Social Media Using Transformer Architecture

Abstract: Coded language evolves quickly, and its use varies over time. Such content on social media contributes to a toxic online environment and fosters hatred, which leads to real-world hate crimes or acts of violence. In this work, we propose a methodology that captures the hierarchical evolution of antisemitic coded terminology (e.g., cultural marxism, globalist, cabal, world economic forum, world order, etc.) and concepts in an unsupervised manner using state-of-the-art large language models. If new text data fits an existing concept, it is added to the already available concept based on contextual similarity. Otherwise, it is further analyzed to determine whether it represents a new concept or a new sub-concept. Experiments conducted over different applied settings show clear patterns that are extremely useful for examining the evolution of hate on social platforms.

- September 19

Dr. Ahmad Mousavi, American University

Mean-Reverting Portfolios with Sparsity and Volatility Constraints

Abstract: Finding mean-reverting portfolios with volatility and sparsity constraints requires minimizing a quartic objective function subject to nonconvex quadratic and cardinality constraints. To tackle this problem, we present a tailored penalty decomposition method that approximately solves a sequence of penalized subproblems by a block coordinate descent algorithm. Numerical experiments demonstrate the efficiency of the proposed method.

- September 5

Dr. Zeynep Kacar, American University

Dissecting Tumor Clonality in Liver Cancer: A Phylogeny Analysis using Statistical and Computational Tools

Abstract: Liver cancer is a heterogeneous disease characterized by extensive genetic and clonal diversity. Understanding the clonal evolution of liver tumors is crucial for developing effective treatment strategies. This work aims to dissect the tumor clonality in liver cancer using computational and statistical tools, with a focus on phylogenetic analysis. Through advancements in defining and assessing phylogenetic clusters, we gain a deeper understanding of the survival disparities and clonal evolution within liver tumors, which can inform the development of tailored treatment strategies and improve patient outcomes. The central data analyses of this research concern the derivation of distinct clones and clustered phylogeny types from the basic genomic data in three independent cancer cohorts.

Spring 2023

- February 7

Stephen D. Casey, American University

Sampling via Sampling Set Generating Functions

Abstract: We develop connections between some of the most powerful theories in analysis, tying the Shannon sampling formula to the Poisson summation formula, Cauchy’s integral and residue formulae, Jacobi interpolation, and Levin’s sine-type functions. The techniques use tools from complex analysis, and in particular, the Cauchy theory and the theory of entire functions, to realize sampling sets Λ as zero sets of well-chosen entire functions (sampling set generating functions). We then reconstruct the signal from the set of samples using the Cauchy-Jacobi machinery. These methods give us powerful tools for creating a variety of general sampling formulae, e.g., allowing us to derive Shannon sampling and Papoulis generalized sampling via Cauchy theory. The techniques developed are also manifest in solutions to the analytic Bezout equation associated with certain multi-channel deconvolution problems, and we show how these lead to multi-rate sampling. We give specific examples of non-commensurate lattices associated with multi-channel deconvolution and use a generalization of B. Ya. Levin’s sine-type functions to develop interpolating formulae on these sets. We then give specific examples of coprime lattices in both rectangular and radial domains, and use generalizations of B. Ya. Levin’s sine-type functions to develop sampling formulae on these sets. We close by discussing how one would extend signal sampling to non-Euclidean domains.

- February 17

Hajime Shimao, McGill University

Welfare Cost of Fair Prediction and Pricing in Insurance Market

Abstract: While the fairness and accountability in machine learning tasks have attracted attention from practitioners, regulators, and academicians for many applications, their consequence in terms of stakeholders' welfare is under-explored, especially via empirical studies and in the context of insurance pricing. General insurance pricing is a complicated process that may involve cost modeling, demand modeling, and price optimization, depending on the line of business and jurisdiction. Fairness and accountability regulatory constraints can be applied at each stage of the insurers’ decision-making. The field so far lacks a framework to empirically evaluate these regulations in a unified way. In this paper, we develop an empirical framework covering the entire pricing process to evaluate the impact of fairness and accountability regulations on both consumer welfare and firm profit, as the link between the predictive accuracy of cost modeling and its welfare consequence is theoretically undetermined for insurance pricing. Applying the empirical framework to a dataset of the French auto insurance market, our main results show that (1) the accountability requirement can incur significant costs for the insurer and consumers; (2) fairness-aware ML algorithms on cost modeling alone cannot achieve fairness in the market price or welfare, while they significantly harm the insurer's profit and consumer welfare, particularly of females; (3) the fairness and accountability constraints considered on the cost modeling or pricing alone cannot satisfy the EU gender-neutral insurance pricing regulation unless we combine the price optimization ban with particular individual fairness notions in the cost prediction.

- February 21

John Nolan, American University

Random Walks and Capacity

Abstract: Random walks are models for how a particle moves when there is uncertainty about its path. We informally describe the most important random walk - Brownian motion. The surprising connection between Brownian motion and harmonic functions has yielded important results in both probability theory and harmonic analysis. In particular, Brownian motion gives formal and computational ways to solve the heat equation and methods of calculating capacity for complicated domains. We describe generalizations of this to stable processes and then give recent results that provide a method of computing Riesz capacity for general sets. This talk will be accessible to undergraduate students.

- February 24, 2023

Evan Rosenman, Harvard Data Science Initiative

Shrinkage Estimation for Causal Inference and Experimental Design

Abstract: How can observational data be used to improve the design and analysis of randomized controlled trials (RCTs)? We first consider how to develop estimators to merge causal effect estimates obtained from observational and experimental datasets, when the two data sources measure the same treatment. To do so, we extend results from the Stein shrinkage literature. We propose a generic "recipe" for deriving shrinkage estimators, making use of a generalized unbiased risk estimate. Using this procedure, we develop two new estimators and prove finite sample conditions under which they have lower risk than an estimator using only experimental data. Next, we consider how these estimators might contribute to more efficient designs for prospective randomized trials. We show that the risk of a shrinkage estimator can be computed efficiently via numerical integration. We then propose algorithms for determining the experimental design -- that is, the best allocation of units to strata -- by optimizing over this computable shrinker risk.

- February 28, 2023

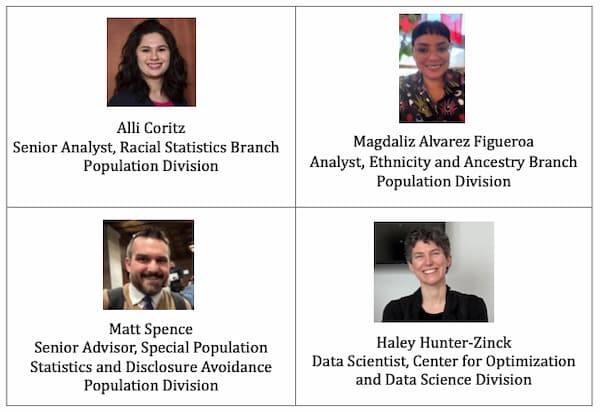

Dr. Krista Park, Special Assistant, Center for Optimization & Data Science, US Census Bureau

Record Linkage in Practice for National Statistics

Abstract: The Census Bureau extensively uses administrative data to increase the quality of statistical products while reducing the respondent burden of direct inquiries. This presentation describes current production record linkage at the Census Bureau, identified areas for improvement, and strategies to improve our capabilities to benefit the Census Bureau, the federal statistical system, and the American public going forward. These advances in the field of entity resolution and record linkage demonstrate the importance of interdisciplinary approaches and teams.

- March 3, 2023

Dr. Kelum Gajamannage, Texas A&M University at Corpus Christi

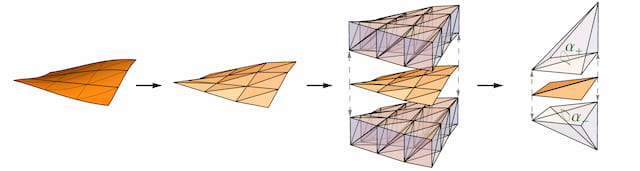

Bounded Manifold Completion

Abstract: Nonlinear dimensionality reduction or, equivalently, the approximation of high-dimensional data using a low-dimensional nonlinear manifold is an active area of research. In this talk, I will present a thematically different approach for constructing a low-dimensional manifold that lies within a set of bounds derived from a given point cloud. In particular, rather than constructing a manifold by minimizing some global loss, which has a long history including classic algorithms such as Principal Component Analysis, we construct a manifold of a given dimension satisfying point-wise bounds. A matrix representing distances on a low-dimensional manifold is low rank; thus, our method follows a similar notion as those of the current low-rank Matrix Completion (MC) techniques for recovering a partially observed matrix from a small set of fully observed entries. MC methods are currently used to solve challenging real-world problems such as image inpainting and recommender systems. Our MC scheme utilizes efficient optimization techniques that include employing a nuclear norm convex relaxation as a surrogate for non-convex and discontinuous rank minimization. To impose that the recovered matrix represents the distances on a manifold, we introduce a new constraint to our optimization scheme that ensures the Gramian matrix of the recovered distance matrix is positive semidefinite. This method theoretically guarantees the construction of low-dimensional embeddings and is robust to non-uniformity in the sampling of the manifold. We validate the performance of our method using both theoretical analysis and real-life benchmark datasets.

- March 6, 2023

Dr. Jingyi (Ginny) Zheng, Auburn University, Auburn, Alabama

Statistical Learning for Spatial-temporal data in Biomedical Applications

Abstract: Biomedical data science has been an emerging field in recent years. It focuses on the development of novel methodologies to analyze large-scale biomedical datasets in order to advance biomedical science discovery. Spatial-temporal data is one of the most commonly encountered data types, not only in the biomedical field but also in a variety of disciplines such as agriculture, computer vision, geosciences, and hydroclimatology. In this talk, I will present three novel methods for analyzing spatial-temporal data, with scalp electroencephalography data as an example. The three methods include automatic determination of the optimal independent components, time-frequency analysis coupled with topological data analysis, and a manifold-based framework for analyzing positive semi-definite matrices. The applicability, efficiency, and interpretability of each method will be demonstrated by extensive simulation and real data application.

- March 22, 2023

Gaël Giraud, Director, McCourt School of Public Policy, Georgetown University; Senior Environmental Justice Program Professor

Some Applications of Mathematics in Economics

Abstract: We will cover a few applications of mathematics in today's economic modelling. Three topics will be explored: algebraic topology in Game theory; continuous time dynamical systems in macro-economics; stochastic calculus in econometrics. Each topic will be illustrated with several examples.

- March 28, 2023

Dr. Karen Saxe, AMS Associate Executive Director and Director of Government Relations

Math & Redistricting—How? Why? What’s New?

Abstract: Gerrymandering is a bipartisan game that strengthens the political power of some and weakens the power of others. We will explore its history, its various forms, and get an overview of how mathematics and statistics are used to detect and prevent it. All those with a high school math background will feel comfortable.

- April 4, 2023

Abera Muhamed, Data Scientist, DAFAI

Forecasting Commodity Price Using Kalman Filter Algorithm: The Case of Coffee

Abstract: In coffee-growing countries, high fluctuations in coffee prices have significant effects on their economies. There is a theoretical and practical advantage to conducting a research project on forecasting coffee prices. The purpose of this study is to forecast coffee prices. For the analysis of coffee price fluctuations, we used daily closing price data recorded from the Ethiopia Commodity Exchange (ECX) market between 25 June 2008 and 5 January 2017. A single linear state space model is used here to estimate an optimal value for the coffee price since it is non-stationary. Kalman filtering is applied to the model. In this analysis, root mean square error (RMSE) is used to evaluate the performance of the algorithm used for estimating and forecasting coffee prices. Using the linear state space model and Kalman filtering algorithm, the RMSE is quite small, suggesting that the algorithm performs well.

- April 18, 2023

Dr. Tyler Kloefkorn, Associate Director, American Mathematical Society (AMS)

Abstract: The federal government makes decisions that affect mathematical sciences research and education, more often than you might think. Funding levels vary from year to year, grant proposal requirements are often adjusted, curriculum reforms are embraced or squashed, and so on. Our community can and should stay informed and share its opinion on federal policymaking. This talk will be an overview of the work of the American Mathematical Society’s Office of Government Relations, which focuses on 1) federal advocacy on behalf of the mathematical sciences community and 2) communication to the mathematical sciences community on federal policymaking. We will discuss federal funding for research, current priorities for our education system, and expanding our network of collaborators and stakeholders.

- April 25, 2023

Dr. John Boright, Executive Director, International Affairs, The National Academy of Sciences

Convening Expertise and Experience to Inform Decision Making: The Role of the National Academy of Sciences

Abstract: National and international decision makers need a constant supply of science-based information, and society in general has a similar need, especially under democratic forms of government. An important part of meeting that need is “convening,” that is, bringing together the wide range of expertise and experience needed. I will talk about the process of convening and advice, with an emphasis on the parts of the system that are within the government and those that are outside of government.

Spring 2022

- April 21, Nimai Mehta and Yong Yoon, American University

Meta-Mathematics and Meta-Economics: In Defense of Adam Smith and the Invisible Hand

Abstract: The recent AU Math/Stat colloquium by Gael Giraud (03/22) has inspired us to consider more critically the application of mathematics to economics. Neoclassical economics, when seen as applied mathematics, has tended to assume the form of a self-contained, closed system of propositions and proofs. Progress in explaining real-world markets and institutions, however, has more often been the result of insights that have emerged from outside which, in turn, have led to a reworking of existing theoretical propositions and models. We highlight here the debt that economic science owes to Adam Smith, whose early insights on the nature of exchange, the division of labor, and the “invisible hand” continue to help modern economists push the boundaries of the science. We will illustrate the value of Smith’s ideas to economics by showing how they help overcome some of the game-theoretic dilemmas of multiple equilibria, instability, and non-cooperative outcomes highlighted by Giraud.

- April 19, Kateryna Nesvit, American University

Computational Modeling in Data Science: Applications and Education

Abstract: The world around us is full of data, and it is interesting to explore, learn, teach, and use the data efficiently. The first part of this presentation focuses on several of the most productive numerical approaches in real-life web/mobile applications to predict and recommend objects. The second part of the talk focuses on the necessary skills/courses of data science techniques to build these computational models.

- April 7, Dr. Aswin Raghavan, SRI International, Princeton, NJ

Machine Learning in a changing world: the promise of lifelong ML

Abstract: Deep learning has proven to be an effective tool to extract knowledge from large and complex datasets. Most current deep learning methods and the underlying optimization algorithms assume a stationary data distribution, whereas in many real-world applications the data distribution can change over time. For example, new labels corresponding to newly annotated properties of interest can arrive incrementally over time. Specifically, deep neural networks trained in a standard fashion exhibit catastrophic forgetting when presented with “tasks” in an online and sequential manner. Over the past few years, new ML algorithms have been developed under the settings of lifelong learning, continual learning, online or streaming learning. The general goal is to accumulate knowledge over a long lifetime consisting of tasks, leverage the knowledge in new but similar tasks (forward transfer), and refine the knowledge due to learning new tasks (backward transfer). In this talk, I will introduce the lifelong learning setting and metrics to measure success. The technical portion of the talk will focus on our recent replay-based methods that can recall critical past experiences. Our results show promise in lifelong image classification and lifelong reinforcement learning in the game of Starcraft-2. I will describe the collaborative effort between SRI and AU that led to further improved results in Starcraft-2. In the final part of the talk, I will discuss some potential applications and impact of lifelong ML.

- April 5, Dan Kalman, American University

Generalizing a Mysterious Pattern

F2F Abstract: In his book, Mathematics: Rhyme and Reason, Mel Currie discusses what he calls a mysterious pattern involving the sequence $a_n = 2^n\sqrt{2-\sqrt{2+\sqrt{2+\cdots+\sqrt{2}}}}$, where $n$ is the number of nested radicals. The mystery hinges on the fact that $a_n \to \pi$ as $n \to \infty$. In this talk, we explore a variety of related results. It is somewhat surprising how many interesting extensions, insights, or generalizations arise. Here are a few examples: $2^n\sqrt{2-\sqrt{2+\sqrt{2+\cdots+\sqrt{3}}}} \to \frac{2\pi}{3}$; $2^n\sqrt{2-\sqrt{2+\sqrt{2+\cdots+\sqrt{1+\varphi}}}} \to \frac{4\pi}{5}$; $2^n\sqrt{-2+\sqrt{2+\sqrt{2+\cdots+\sqrt{16/3}}}} \to 2\ln 3$. (Note that $\varphi$ is the golden mean, $(1+\sqrt{5})/2$.) The basis for this talk is ongoing joint work with Currie.

- March 22, Gaël Giraud, Director, McCourt School of Public Policy, Georgetown University; Senior Environmental Justice Program Professor

Abstract: We will cover a few applications of mathematics in today's economic modelling. Three topics will be explored: algebraic topology in Game theory; continuous time dynamical systems in macro-economics; stochastic calculus in econometrics. Each topic will be illustrated with several examples.

Location: Don Myers Technology and Innovation Building

Fall 2022

- December 7, 2022

Zois Boukouvalas, AU

Efficient and Explainable Multivariate Data Fusion for Misinformation Detection During High Impact Events

Abstract: With the evolution of social media, cyberspace has become the de facto medium for users to communicate during high-impact events such as natural disasters, terrorist attacks, and periods of political unrest. However, during such high-impact events, misinformation on social media can rapidly spread, affecting decision-making and creating social unrest. Identifying the spread of misinformation during high-impact events is a significant data challenge, given the variety of data associated with social media posts. Recent machine learning advances have shown promise for detecting misinformation; however, there are still key limitations that make this a significant challenge. These limitations include the effective and efficient modeling of the underlying non-linear associations of multi-modal data as well as the explainability of a system geared at the detection of misinformation. In this talk we present a novel multivariate data fusion framework based on pre-trained deep learning features and a well-structured and parameter-free joint blind source separation method named independent vector analysis, which can reliably respond to this set of limitations. We present the mathematical formulation of the new data fusion algorithm, demonstrate its effectiveness, and present multiple explainability case studies using a popular multi-modal dataset that consists of tweets during several high-impact events.

- November 29

John T. Rigsby, Chief Analytics Officer, Defense Technical Information Center

"Data Science Projects at the Defense"

Abstract: The mission of the Defense Technical Information Center (DTIC) is to aggregate and fuse science and technology data to rapidly, accurately and reliably deliver the knowledge needed to develop the next generation of technologies to support our Warfighters and help assure national security. This presentation will cover current efforts of the DTIC Data Science and Analytics Cell to support this mission.

- November 1, Elicia John, AU

"Smartphone Data Reveal Neighborhood-Level Racial Disparities in Police Presence"

Abstract: While research on policing has focused on documented actions such as stops and arrests, less is known about patrols and presence. We map the neighborhood movement of nearly ten thousand officers across 21 of America’s largest cities using anonymized smartphone data. We find that police spend more time in neighborhoods with predominantly Hispanic, Asian, and – in particular – Black residents. This disparity persists after controlling for density, socioeconomic, and crime-driven demand for policing, and is lower in cities with a higher share of Black police supervisors (but not officers). It is also associated with a higher number of arrests in some of these communities.

- October 25

"FDA Cybersecurity, Counterintelligence, and Insider Threat Program Overview"

Craig Taylor, US Food and Drug Administration (FDA) Chief Information Security Officer (CISO)

Leah Buckley, FDA (Director, Counterintelligence and Insider Threat)

- October 18, Daniel Bernhofen

Testing the Invisible Hand with a Natural Experiment

Abstract: A central premise of economics is that the market system allocates resources in the right direction, as if directed by an invisible hand. But what is the right direction? The economic subfield of general equilibrium theory has provided an answer to this question via the First Fundamental Welfare Theorem, which employs Pareto optimality as a criterion for the right direction and states that a competitive general equilibrium is Pareto optimal. For this reason, the First Fundamental Welfare Theorem has also been labeled the invisible hand theorem and is viewed as a proof of Adam Smith’s famous conjecture that in a market economy individuals are “…led by an invisible hand to promote an end which was no part of (their) intention” (Adam Smith, Wealth of Nations, 1776, vol I, Book IV, Ch II, p.477). A major criticism of the invisible hand theorem is that it holds under very strong conditions and can’t be refuted by the data.

This lecture provides an overview of a research agenda that employs a natural experiment to test some fundamental theorems in international trade, which is a subfield of general equilibrium theory. First, I show that the mathematical structure of these theorems can be summarized as P∙Z>0, which I call the invisible hand inequality. Second, I discuss the relationship between the invisible hand theorem and the invisible hand inequality. Third, I discuss how the 19th century opening up of Japan to international trade after 200 years of self-imposed isolation provides a natural experiment to test the invisible hand inequalities and provide evidence that decentralized markets allocate resources in the (right) direction of comparative advantage.

For a brief background reading for this talk see: Gains from Trade: Evidence from 19th Century Japan.

Spring 2021

- Feb. 9, 2021: Sauleh Siddiqui (AU)

"A Bilevel Optimization Method for an Exact Solution to Equilibrium Problems with Binary Variables"

- Feb. 16, 2021: Yei Eun Shin (NIH, NCI)

"Weight calibration to improve efficiency for estimating pure absolute risks from the proportional and additive hazards model in the nested case-control design"

- Feb. 23, 2021: Nate Strawn (Georgetown, NIH, NCI)

"Isometric Data Embeddings: Visualizations and Lifted Signal Processing"

- Mar. 16, 2021: Stephen D. Casey (AU, Personnel Data Research Institute)

& Thomas J. Casey (AU)

"The Analysis of Periodic Point Processes"

- Mar. 23, 2021: Zois Boukouvalas (AU)

"Independent Component and Vector Analyses for Explainable Detection of Misinformation During High Impact Events"

- Mar. 30, 2021: Der-Chen Chang (Georgetown)

"Introduction to ∂̄-Neumann Problem"

- Apr. 14, 2021: John M. Abowd (US Census Bureau)

Fall 2021

AU Math & Stat Summer 2021 Research Experiences

October 5

"If you talk to these materials, will they talk back?"

Max Gaultieri, Wilson Senior HS

Abstract: This talk will cover the process behind building and writing code for a sonar system. Next the strength and characteristics of sound reflecting off of different materials will be discussed using data collected by the sonar system.

Mentor: Dr. Michael Robinson

Investigation of Affordable Rental Housing across Prince George’s County, Maryland

Zelene Desiré, Georgetown Visitation Preparatory School

Abstract: Prince George’s County residents experience a shortage of affordable rental housing which varies across ZIP codes. This research investigates whether data from the US Census Bureau’s American Community Survey can help in explaining the differences across the county.

Mentor: Dr. Richard Ressler

Investigation of COVID-19 Vaccination Rates across Prince George’s County, Maryland

Nicolas McClure, Georgetown Day School

Abstract: Prince George’s County reports varying rates of vaccinations for COVID-19 across the county’s ZIP codes. This research investigates whether data from the US Census Bureau’s American Community Survey can help in explaining the differences in vaccination rates across the county.

Mentor: Dr. Richard Ressler

"Double reduction estimation and equilibrium tests in natural autopolyploid populations"

October 19

David Gerard, American University

Abstract: Many bioinformatics pipelines include tests for equilibrium. Tests for diploids are well studied and widely available but extending these approaches to autopolyploids is hampered by the presence of double reduction, the co-migration of sister chromatid segments into the same gamete during meiosis. Though a hindrance for equilibrium tests, double reduction rates are quantities of interest in their own right, as they provide insights about the meiotic behavior of autopolyploid organisms. Here, we develop procedures to (i) test for equilibrium while accounting for double reduction, and (ii) estimate double reduction given equilibrium. To do so, we take two approaches: a likelihood approach, and a novel U-statistic minimization approach that we show generalizes the classical equilibrium χ² test in diploids. Our methods are implemented in the hwep R package on the Comprehensive R Archive Network: https://cran.r-project.org/package=hwep.

The talk will be based on the author’s new preprint: https://doi.org/10.1101/2021.09.24.461731

Multiscale mechanistic modelling of the host defense in invasive aspergillosis

October 26

Henrique de Assis Lopes Ribeiro, University of Florida

Abstract: Fungal infections of the respiratory system are a life-threatening complication for immunocompromised patients. Invasive pulmonary aspergillosis, caused by the airborne mold Aspergillus fumigatus, has a mortality rate of up to 50% in this patient population. The lack of neutrophils, a common immunodeficiency caused by, e.g., chemotherapy, disables a mechanism of sequestering iron from the pathogen, an important virulence factor. This paper shows that a key reason why macrophages are unable to control the infection in the absence of neutrophils is the onset of hemorrhaging, as the fungus punctures the alveolar wall. The result is that the fungus gains access to heme-bound iron. At the same time, the macrophage response to the fungus is impaired. We show that these two phenomena together enable the infection to be successful. A key technology used in this work is a novel dynamic computational model used as a virtual laboratory to guide the discovery process. The paper shows how it can be used further to explore potential therapeutics to strengthen the macrophage response.

Supporting the fight against the proliferation of chemical weapons through cheminformatics

November 2

Stefano Costanzi, American University

Abstract: Several frameworks at the national and international level have been put in place to foster chemical weapons nonproliferation and disarmament. To support their missions, these frameworks establish and maintain lists of chemicals that can be used as chemical warfare agents as well as precursors for their synthesis (CW-control lists). Working with these lists poses some challenges for frontline officers implementing these frameworks, such as export control officers and customs officials, as well as employees of chemical, shipping, and logistics companies. To overcome these issues, we have conceptualized a cheminformatics tool, of which we are currently developing a first functioning prototype, that would automate the task of assessing whether a chemical is part of a CW-control list. This complex work, at the intersection between chemistry and global security, is a collaborative project involving the Stimson Center’s Partnerships in Proliferation Prevention Program and the Costanzi Research Group at American University and is financially supported by Global Affairs Canada.

References

(1) S. Costanzi, G. D. Koblentz, R. T. Cupitt. Leveraging Cheminformatics to Bolster the Control of Chemical Warfare Agents and their Precursors. Strategic Trade Review, 2020, 6, 9, 69-91.

(2) S. Costanzi, C. K. Slavick, B. O. Hutcheson, G. D. Koblentz, R. T. Cupitt. Lists of Chemical Warfare Agents and Precursors from International Nonproliferation Frameworks: Structural Annotation and Chemical Fingerprint Analysis. J. Chem. Inf. Model., 2020, 60, 10, 4804-4816.

Analytic Bezout Equations and Sampling in Rectangular and Radial Coordinates

November 16

Stephen D. Casey, American University

Abstract: Multichannel deconvolution was developed by C. A. Berenstein et al. as a technique for circumventing the inherent ill-posedness in recovering information from linear translation invariant (LTI) systems. It allows for complete recovery by linking together multiple LTI systems in a manner similar to Bezout equations from number theory. Solutions to these analytic Bezout equations associated with certain multichannel deconvolution problems are interpolation problems on unions of coprime lattices in both rectangular and radial domains. These solutions provide insight into how one can develop general sampling schemes on such sets. We give solutions to deconvolution problems via complex interpolation theory. We then give specific examples of coprime lattices in both rectangular and radial domains, and use generalizations of B. Ya. Levin’s sine-type functions to develop sampling formulae on these sets.

Experiencing Medieval Astronomy with an Astrolabe

February 15

Michael Robinson, American University

Abstract: Without a telescope, even in the midst of the city lights, you can still see some of the brightest stars and planets. If you're patient, you can watch the planets move across the sky. Surprisingly, with just these data and a simple tool called an astrolabe you can tell the time, find compass directions, determine the season, and even measure the diameter of planetary orbits. Astrolabes have been made since antiquity, and you can even make your own in AU's Design and Build Lab! (That's what I did!) My astrolabe is patterned off a design popular in medieval England, and whose use is described by the famous poet Geoffrey Chaucer in a letter to his son.

So if you have an astrolabe, what does it do? Just how accurate is it? Can you really use one to measure the solar system? Is the sun really the center of the solar system? Over the past three years, I have used my astrolabe to collect sightings of celestial bodies visible from my back yard, hoping to answer these questions. Although the astronomical tool is primitive, the resulting dataset is ripe for modern data processing and yields interesting insights. I'll explain the whole process from start to finish: building the astrolabe, using it for data collection, and analyzing the resulting data.

Location: Don Myers Technology and Innovation Building (DMTIB), AU East Campus – Room 111

Liars, Damned Liars, and Experts

February 23

Mary Gray & Nimai Mehta, American University

Abstract: The admission of expert opinion by courts meant to assist the trier of facts has enjoyed a checkered history within the Anglo-American legal system. Progress has been achieved where expert testimony proffered was determined by the court to be relevant, material, and competent. In cases where these criteria of admissibility remained undeveloped, or were misapplied in the face of complex evidence, expert testimony has done more harm than good in the search for truth. From Pascal and Fermat to de Moivre, from Bayes to Fisher, probability and data have come together to establish the role of statistics in civil and criminal justice. We explore the role statisticians as expert witnesses have played within the Anglo-American system of justice, both in the US courts and in the Indian subcontinent. The evolution of the 1872 Indian Evidence Act has in many ways paralleled the changing rules of evidence and expert testimony in U.S. federal and state statutes. This is evident in the challenges courts in both places have faced, for example, in the application of the Daubert guidelines in cases involving complex, scientific data, such as matters of DNA evidence, the environment, and public health. Lastly, we look at the extent to which the two legal systems have retained the adversarial system as a check on expert opinion and its misuse.

Fall 2020

9/8/2020: Stephen D. Casey, American University; Norbert Wiener Center, University of Maryland

A New Architecture for Cell Phones: Sampling via Projection for UWB and AFB Systems

9/15/2020: Martha Dusenberry Pohl, American University

Further Visualizations of the Census: ACS, Redistricting, Names Files; & HMDA using Shapefiles, BISG, Venn Diagrams, Quantification, 3-D rotation, & Animation

9/22/2020: Michael Baron, American University

Statistical analysis of epidemic counts data: modeling, detection of outbreaks, and recovery of missing data

9/29/2020: Soutrik Mandal, Division of Cancer Epidemiology and Genetics (National Cancer Institute, National Institutes of Health)

Incorporating survival data to case-control studies with incident and prevalent cases

10/13/2020: John P. Nolan, American University

Hitting objects with random walks

10/20/2020: Yuri Levin-Schwartz, Icahn School of Medicine at Mount Sinai

Machine learning improves estimates of environmental exposures

10/27/2020: Nathalie Japkowicz, American University

Harnessing Dataset Complexity in Classification Tasks

11/10/2020: Anthony Kearsley, NIST

11/17/2020: Donna Dietz, American University

An Analysis of IQ-Link (TM)

Spring 2020

1/21/2020: Justin Pierce, Federal Reserve Washington DC

Examining the Decline in US Manufacturing Employment

1/28/2020: Ruth Pfeiffer, Biostatistics Branch, National Cancer Institute, NIH

Sufficient dimension reduction for high dimensional longitudinally measured biomarkers

2/11/2020: Erica L. Smith, Bureau of Justice Statistics, US Dept. of Justice

Overview of Department of Justice Statistical Data Collections Related to Gun Violence

2/18/2020: Sudip Bhattacharjee, University of Connecticut

A Text Mining and Machine Learning Platform to Classify Businesses into NAICS codes

2/25/2020: Avi Bender, National Technical Information Service (NTIS)

Data Science Skills for Delivering Mission Outcome: An interactive discussion with the Director of the National Technical Information Service (NTIS), US Department of Commerce

Fall 2019

9/10/2019: Dr. Scott Parker, American University

Some useful information about the Wilcoxon-Mann-Whitney test and effect size measurement

9/17/2019: Dr. Michael Robinson, American University

Radio Fox Hunting using Sheaves

9/24/2019: Dr. Zois Boukouvalas, American University

Data Fusion in the Age of Data: Recent Theoretical Advances and Applications

10/8/2019: Dr. Elizabeth Stuart, Johns Hopkins

Assessing and enhancing the generalizability of randomized trials to target populations

10/15/2019: Latif Khalil, JBS International, Inc.

Entity Resolution Techniques to Significantly Improve Healthcare Data Quality

10/22/2019: Dennis Lucarelli, American University

Steering qubits, cats and cars via gradient ascent in function space

10/29/2019: Michael Thieme, Assistant Director for Decennial Census Programs, Systems and Contracts

Census 2020 - Clear Vision for the Country

11/12/2019: John Eltinge, US Census Bureau

Transparency and Reproducibility in the Design, Testing, Implementation and Maintenance of Procedures for the Integration of Multiple Data Sources

11/19/2019: Xander Faber, Institute for Defense Analyses, Center for Computing Sciences